In recent months, there has been no notable change in inflation uncertainty at either horizon.

Thursday, December 7, 2017

Consumer Inflation Uncertainty Holding Steady

I recently updated my Consumer Inflation Uncertainty Index through October 2017. Data is available here and the official (gated) publication in the Journal of Monetary Economics is here. Inflation uncertainty remains low by historical standards. Uncertainty about longer-run (5- to 10-year ahead) inflation remains lower than uncertainty about next-year inflation.

The average "less uncertain" type consumer still expects longer-run inflation around 2.4%, while the longer-run inflation forecasts of "highly uncertain" consumers have risen in recent months to the 8-10% range.

Tuesday, October 24, 2017

Is Taylor a Hawk or Not?

Two Bloomberg articles published just a week apart call John Taylor, a contender for Fed Chair, first hawkish, then dovish. The first, by Garfield Clinton Reynolds, notes:

...The dollar rose and the 10-year U.S. Treasury note fell on Monday after Bloomberg News reported Taylor, a professor at Stanford University, impressed President Donald Trump in a recent White House interview.

Driving those trades was speculation that the 70 year-old Taylor would push rates up to higher levels than a Fed helmed by its current chair, Janet Yellen. That’s because he is the architect of the Taylor Rule, a tool widely used among policy makers as a guide for setting rates since he developed it in the early 1990s.

But the second, by Rich Miller, claims that "Taylor’s Walk on Supply Side May Leave Him More Dove Than Yellen." Miller explains,

"While Taylor believes the [Trump] administration can substantially lift non-inflationary economic growth through deregulation and tax changes, Yellen is more cautious. That suggests that the Republican Taylor would be less prone than the Democrat Yellen to raise interest rates in response to a policy-driven economic pick-up."

What actually makes someone a hawk? Simply favoring rules-based policy is not enough. A central banker could use a variation of the Taylor rule that implies very little response to inflation, or that allows very high average inflation. Beliefs about the efficacy of supply-side policies also do not determine hawk or dove status. Let's look at the Taylor rule from Taylor's 1993 paper:

r = p + 0.5y + 0.5(p - 2) + 2,

where r is the federal funds rate, y is the percent deviation of real GDP from target, and p is inflation over the previous 4 quarters. Taylor notes (p. 202) that lagged inflation is used as a proxy for expected inflation, and y = 100(Y - Y*)/Y*, where Y is real GDP and Y* is trend GDP (a proxy for potential GDP).

The 0.5 coefficients on the y and (p-2) terms reflect how Taylor estimated that the Fed approximately behaved, but in general a Taylor rule could have different coefficients, reflecting the central bank's preferences. The bank could also have an inflation target p* not equal to 2, and replace (p-2) with (p-p*). Just being really committed to following a Taylor rule does not tell you what steady state inflation rate or how much volatility a central banker would allow. For example, a central bank could follow a rule with p*=5 and a relatively large coefficient on y and small coefficient on (p-5), allowing both high and volatile inflation.
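To make the role of the coefficients and the target concrete, here is a minimal Python sketch of the rule with adjustable parameters. The function and argument names are my own, the constant term is simply labeled `intercept` (it is often interpreted as the equilibrium real rate), and the "alternative" parameter values are hypothetical numbers chosen only to illustrate the p*=5 example.

```python
def taylor_rule(inflation, output_gap, target=2.0,
                gap_coef=0.5, inflation_coef=0.5, intercept=2.0):
    """Policy rate implied by r = p + a_y*y + a_p*(p - p*) + intercept.

    The defaults reproduce Taylor's 1993 rule: r = p + 0.5y + 0.5(p - 2) + 2.
    All inputs and the result are in percent.
    """
    return (inflation
            + gap_coef * output_gap
            + inflation_coef * (inflation - target)
            + intercept)


# Taylor's 1993 coefficients: inflation at target and a zero output gap.
baseline = taylor_rule(inflation=2.0, output_gap=0.0)

# Same rule when inflation is 3% and output is 1% above trend.
tighter = taylor_rule(inflation=3.0, output_gap=1.0)

# Hypothetical rule with p* = 5, a large weight on output, a small weight on inflation.
high_target = taylor_rule(inflation=5.0, output_gap=0.0,
                          target=5.0, gap_coef=1.0, inflation_coef=0.1)

print(baseline, tighter, high_target)
```

Both bankers in this sketch are equally "rules-based"; what differs is the target and the weights, which is where hawkishness or dovishness actually lives.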

What do "supply side" beliefs imply? Well, Miller thinks that Taylor believes the Trump tax and deregulatory policy changes will raise potential GDP, or Y*. For a given value of Y, a higher estimate of Y* implies a lower estimate of y, which implies lower r. So yes, in the very short run, we could see lower r from a central banker who "believes" in supply side economics than from one who doesn't, all else equal.
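As a rough worked example with made-up numbers, suppose inflation is p = 2 and measured real GDP is Y = 100. One central banker estimates Y* = 100, while a supply-side optimist estimates Y* = 101. Plugging both estimates into Taylor's 1993 rule gives:

```latex
\begin{align*}
y_{\text{baseline}} &= 100\,\frac{Y - Y^*}{Y^*} = 100\,\frac{100 - 100}{100} = 0, \\
r_{\text{baseline}} &= 2 + 0.5(0) + 0.5(2 - 2) + 2 = 4, \\
y_{\text{supply-side}} &= 100\,\frac{100 - 101}{101} \approx -0.99, \\
r_{\text{supply-side}} &= 2 + 0.5(-0.99) + 0.5(2 - 2) + 2 \approx 3.5.
\end{align*}
```

So in this hypothetical case, a one percent higher estimate of potential output lowers the prescribed rate by about half a percentage point, with no change in the rule's coefficients or target.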

But what if Y* does not really rise as much as a supply-sider central banker thinks it will? Then the lower r will result in higher p (and Y), to which the central bank will react by raising r. So long as the central bank follows the Taylor principle (so the sum of the coefficients on p and (p-p*) in the rule is greater than 1), equilibrium long-run inflation is p*.
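The Taylor principle can be spelled out in one line. Writing the rule with general coefficients (the symbols a_y and a_p are my notation, not Taylor's), the nominal rate responds to inflation by 1 + a_p, so the real rate r - p rises with inflation exactly when a_p > 0:

```latex
\begin{align*}
r &= p + a_y\, y + a_p\,(p - p^*) + 2, \\
\frac{\partial r}{\partial p} &= 1 + a_p, \qquad
\frac{\partial (r - p)}{\partial p} = a_p, \\
1 + a_p > 1 &\iff a_p > 0.
\end{align*}
```

With Taylor's 1993 value a_p = 0.5, the nominal rate moves by 1.5 percentage points for each percentage point of inflation, so the principle is satisfied.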

The parameters of the Taylor rule reflect the central bank's preferences. The right-hand-side variables, like Y*, are measured or forecasted. That reflects a central bank's competence at measuring and forecasting, which depends on a number of factors ranging from the strength of its staff economists to the priors of the Fed Chair to the volatility and unpredictability of other economic conditions and policies.

Neither Taylor nor Yellen seems likely to change the inflation target to something other than 2 (and even if they wanted to, they could not unilaterally make that decision). They do likely differ in their preferences for stabilizing inflation versus stabilizing output, and in that respect I'd guess Taylor is more hawkish.

Yellen's efforts to look at alternative measures of labor market conditions in the past are also about Y*. In some versions of the Taylor rule, you see unemployment measures instead of output measures (where the idea is that they generally comove). Willingness to consider multiple measures of employment and/or output is really just an attempt to get a better measure on how far the real economy is from "potential." It doesn't make a person inherently more or less hawkish.

As an aside, this whole discussion presumes that monetary policy itself (or more generally, aggregate demand shifts) do not change Y*. Hysteresis theories reject that premise.

Monday, October 23, 2017

Cowen and Sumner on Voters' Hatred of Inflation

A recent Scott Sumner piece has the declarative title, "Voters don't hate inflation." Sumner is responding to a piece by Tyler Cowen in Bloomberg, where Cowen writes:

Congress insists that the Fed is “independent"...But if voters hated what the Fed was doing, Congress could rather rapidly hold hearings and exert a good deal of influence. Over time there is a delicate balancing act, where the Fed is reluctant to show it is kowtowing to Congress, so it very subtlety monitors its popularity so it doesn’t have to explicitly do so.

If we imposed a monetary rule on the Fed, even a theoretically optimal rule, it would stop the Fed from playing this political game. Many monetary rules call for higher rates of price inflation if the economy starts to enter a downturn. That’s often the right economic prescription, but voters hate high inflation.

Emphasis added, and the emphasized bit is quoted by Scott Sumner, who argues that voters don't hate inflation per se, but hate falling standards of living. He adds, "How people feel about a price change depends entirely on whether it's caused by an aggregate supply shift or a demand shift."

The whole exchange made my head hurt a bit because it turns the usual premise behind macroeconomic policy design--and specifically, central bank independence and monetary policy rules--on its head. The textbook reasoning goes something like this. Policymakers facing re-election have an incentive to pursue expansionary macroeconomic policies (positive aggregate demand shocks). This boosts their popularity, because people enjoy the lower unemployment and don't really notice or worry about the inflationary consequences.

Even an independent central bank operating under discretion faces the classic "dynamic inconsistency" problem if it tries to commit to low inflation, resulting in suboptimally high (expected and actual) inflation. So monetary policy rules (the topic of Cowen's piece) are, in theory, a way for the central bank to "bind its hands" and help it achieve lower (expected and actual) inflation. An alternative that is sometimes suggested is to appoint a central banker who is more inflation averse than the public. If the problem is that the public hates inflation, how is this a solution?

Cowen seems to argue that a monetary rule would be unpopular, and hence not fully credible, exactly when it calls for policy to be expansionary. But such a rule, in theory, would have been put into place to prevent policy from being too expansionary. Without such a rule, policy would presumably be more expansionary, so if voters hate high inflation, they would really hate removing the rule.

One issue that came up frequently at the Rethinking Macroeconomic Policy IV conference was the notion that inflationary bias, and the implications for central banking that come with it, might be a thing of the past. There is certainly something to that story in the recent low inflation environment. But I can still hardly imagine circumstances in which expansionary policy in a downturn would be the unpopular choice among voters themselves. It may be unpopular among members of Congress for other reasons-- because it is unpopular among select powerful constituents, for example-- but that is another issue. And the members of Congress who are most in favor of imposing a monetary policy rule for the Fed are also, I suspect, the most inflation averse, so I find it hard to see how the potentially inflationary nature of rules is what would (a) make them politically unpopular and (b) lead Congress to thus restrict the Fed's independence.

Friday, October 13, 2017

Rethinking Macroeconomic Policy

I had the pleasure of attending “Rethinking Macroeconomic Policy IV” at the Peterson Institute for International Economics. I highly recommend viewing the panels and materials online.

The two-day conference left me wondering what it actually means to “rethink” macro. The conference title refers to rethinking macroeconomic policy, not macroeconomic research or analysis, but of course these are related. Adam Posen’s opening remarks expressed dissatisfaction with DSGE models, VARs, and the like, and these sentiments were occasionally echoed in the other panels in the context of the potentially large role of nonlinearities in economic dynamics. Then, in the opening session, Olivier Blanchard talked about whether we need a “revolution” or “evolution” in macroeconomic thought. He leans toward the latter, while his coauthor Larry Summers leans toward the former. But what could either of these look like? How could we replace or transform the existing modes of analysis?

I looked back on the materials from Rethinking Macroeconomic Policy of 2010. Many of the policy challenges discussed at that conference are still among the biggest challenges today. For example, low inflation and low nominal interest rates limit the scope of monetary policy in recessions. In 2010, raising the inflation target and strengthening automatic fiscal stabilizers were both suggested as possible policy solutions meriting further research and discussion. Inflation and nominal rates are still very low seven years later, and higher inflation targets and stronger automatic stabilizers are still discussed, but what I don’t see is a serious proposal for change in the way we evaluate these policy proposals.

Plenty of papers use basically standard macro models and simulations to quantify the costs and benefits of raising the inflation target. Should we care? Should we discard them and rely solely on intuition? I’d say: probably yes, and probably no. Will we (academics and think tankers) ever feel confident enough in these results to make a real policy change? Maybe, but then it might not be up to us.

Ben Bernanke raised probably the most specific and novel policy idea of the conference, a monetary policy framework that would resemble a hybrid of inflation targeting and price level targeting. In normal times, the central bank would have a 2% inflation target. At the zero lower bound, the central bank would allow inflation to rise above the 2% target until inflation over the duration of the ZLB episode averaged 2%. He suggested that this framework would have some of the benefits of a higher inflation target and of price level targeting without some of the associated costs. Inflation would average 2%, so distortions from higher inflation associated with a 4% target would be avoided. The possibly adverse credibility costs of switching to a higher target would also be minimized. The policy would provide the usual benefits of history-dependence associated with price level targeting, without the problems that this poses when there are oil shocks.
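To get a feel for the make-up arithmetic, here is a minimal Python sketch. It is my own illustration, not Bernanke's proposal or the Board's simulations: it uses a simple arithmetic average of per-period inflation rates (ignoring compounding), and the inflation path and overshoot rate are hypothetical.

```python
def makeup_periods_needed(zlb_inflation, overshoot_rate, target=2.0):
    """Count the extra periods of above-target inflation needed before
    average inflation over the whole episode reaches the target.

    zlb_inflation: inflation rates (percent) observed so far in the episode.
    overshoot_rate: inflation rate (percent) assumed during the make-up phase.
    """
    total = sum(zlb_inflation)
    periods = len(zlb_inflation)
    if total >= target * periods:
        return 0  # the episode average is already at or above target
    if overshoot_rate <= target:
        raise ValueError("overshoot_rate must exceed target to close a shortfall")

    extra = 0
    # Keep adding overshoot periods until the episode average reaches the target.
    while total < target * periods:
        total += overshoot_rate
        periods += 1
        extra += 1
    return extra


# Hypothetical episode: four years of below-target inflation, then 3% inflation.
print(makeup_periods_needed([1.0, 1.0, 1.5, 1.5], overshoot_rate=3.0))
```

The point of the sketch is only that the length of the overshoot depends on the accumulated shortfall, which is the history-dependence the framework borrows from price level targeting.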

It’s an exciting idea, and intuitively really appealing to me. But how should the Fed ever decide whether or not to implement it? Bernanke mentioned that economists at the Board are working on simulations of this policy. I would guess that these simulations involve many of the assumptions and linearizations that rethinking types love to demonize. So again: Should we care? Should we rely solely on intuition and verbal reasoning? What else is there?

Later, Jason Furman presented a paper titled, “Should policymakers care whether inequality is helpful or harmful for growth?” He discussed some examples of evaluating tradeoffs between output and distribution in toy models of tax reform. He begins with the Mankiw and Weinzierl (2006) example of a 10 percent reduction in labor taxes paid for by a lump-sum tax. In a Ramsey model with a representative agent, this policy change would raise output by 1 percent. Replacing the representative agent with agents with the actual 2010 distribution of U.S. incomes, only 46 percent of households would see their after-tax income increase and 41 percent would see their welfare increase. More generally, he claims that “the growth effects of tax changes are about an order of magnitude smaller than the distributional effects of tax changes—and the disparity between the welfare and distribution effects is even larger” (14). He concludes:

“a welfarist analyzing tax policies that entail tradeoffs between efficiency and equity would not be far off in just looking at static distribution tables and ignoring any dynamic effects altogether. This is true for just about any social welfare function that places a greater weight on absolute gains for households at the bottom than at the top. Under such an approach policymaking could still be done under a lexicographic process—so two tax plans with the same distribution would be evaluated on the basis of whichever had higher growth rates…but in this case growth would be the last consideration, not the first” (16).

As Posen then pointed out, Furman’s paper and his discussants largely ignored the discussions of macroeconomic stabilization and business cycles that dominated the previous sessions on monetary and fiscal policy. The panelists conceded that recessions, and hysteresis in unemployment, can exacerbate economic disparities. But the fact that stabilization policy was so disconnected from the initial discussion of inequality and growth shows just how much rethinking still has not occurred.

In 1987, Robert Lucas calculated that the welfare costs of business cycles are minimal. In some sense, we have “rethought” this finding. We know that it is built on assumptions of a representative agent and no hysteresis, among other things. And given the emphasis in the fiscal and monetary policy sessions on avoiding or minimizing business cycle fluctuations, clearly we believe that the costs of business cycle fluctuations are in fact quite large. I doubt many economists would agree with the statement that “the welfare costs of business cycles are minimal.” Yet, the public finance literature, even as presented at a conference on rethinking macroeconomic policy, still evaluates welfare effects of policy using models that totally omit business cycle fluctuations, because, within those models, such fluctuations hardly matter for welfare. If we believe that the models are “wrong” in their implications for the welfare effects of fluctuations, why are we willing to take their implications for the welfare effects of tax policies at face value?
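For readers who have not seen it, the flavor of the Lucas calculation can be conveyed with the standard textbook approximation. Under a representative agent with constant relative risk aversion γ and log consumption fluctuating around trend with standard deviation σ, the fraction λ of consumption the agent would give up to eliminate the fluctuations is roughly half of γ times the variance. The value of σ below is an illustrative assumption, not a figure taken from the conference or from this post:

```latex
\begin{align*}
\lambda &\approx \tfrac{1}{2}\,\gamma\,\sigma^{2}, \\
\gamma = 1,\ \sigma = 0.03 \quad\Longrightarrow\quad
\lambda &\approx \tfrac{1}{2}(1)(0.03)^{2} = 0.00045 \approx 0.05\% \text{ of consumption}.
\end{align*}
```

The number is tiny because the formula only sees aggregate consumption volatility; anything that concentrates losses on particular households, or makes them persistent, is outside the calculation by construction.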

I don’t have a good alternative—but if there is a Rethinking Macroeconomic Policy V, I hope some will be suggested. The fact that the conference speakers are so distinguished is both an upside and a downside. They have the greatest understanding of our current models and policies, and in many cases were central to developing them. They can rethink, because they have already thought, and moreover, they have large influence and loud platforms. But they are also quite invested in the status quo, for all they might criticize it, in a way that may prevent really radical rethinking (if it is really needed, which I’m not yet convinced of). (A more minor personal downside is that I was asked multiple times whether I was an intern.)

If there is a Rethinking Macroeconomic Policy V, I also hope that there will be a session on teaching and training. The real rethinking is going to come from the next generations of economists. How do we help them learn and benefit from the current state of economic knowledge without being constrained by it? This session could also touch on continuing education for current economists. What kinds of skills should we be trying to develop now? What interdisciplinary overtures should we be making?

Thursday, September 28, 2017

An Inflation Expectations Experiment

Last semester, my senior thesis advisee Alex Rodrigue conducted a survey-based information experiment via Amazon Mechanical Turk. We have coauthored a working paper detailing the experiment and results titled "Household Informedness and Long-Run Inflation Expectations: Experimental Evidence." I presented our research at my department seminar yesterday with the twin babies in tow, and my tweet about the experience is by far my most popular to date:

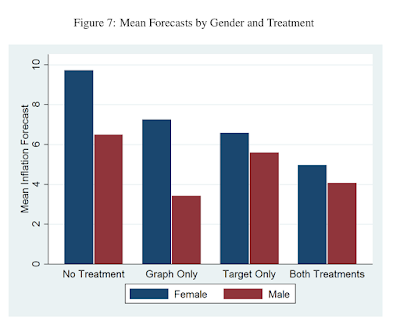

"I gave a 90 minute econ seminar today with the twin babies, both awake. Held one and audience passed the other around. A first in economics?" (Carola Conces Binder, @cconces, September 28, 2017)

Consumers' inflation expectations are very dispersed; on household surveys, many people report long-run inflation expectations that are far from the Fed's 2% target. Are these people unaware of the target, or do they know it but remain unconvinced of its credibility? In another paper in the Journal of Macroeconomics, I provide some non-experimental evidence that public knowledge of the Fed and its objectives is quite limited. In this paper, we directly treat respondents with information about the target and about past inflation, in randomized order, and see how they revise their reported long-run inflation expectations. We also collect some information about their prior knowledge of the Fed and the target, their self-reported understanding of inflation, and their numeracy and demographic characteristics. About a quarter of respondents knew the Fed's target and two-thirds could identify Yellen as Fed Chair from a list of three options.

As shown in the figure above, before receiving the treatments, very few respondents forecast 2% inflation over the long-run and only about a third even forecast in the 1-3% range. Over half report a multiple-of-5% forecast, which, as I argue in a recent paper in the Journal of Monetary Economics, is a likely sign of high uncertainty. When presented with a graph of the past 15 years of inflation, or with the FOMC statement announcing the 2% target, the average respondent revises their forecast around 2 percentage points closer to the target. Uncertainty also declines.

The results are consistent with imperfect information models because the information treatments are publicly available, yet respondents still revise their expectations after the treatments. Low informedness is part of the reason why expectations are far from the target. The results are also consistent with Bayesian updating, in the sense that high prior uncertainty is associated with larger revisions. But equally noteworthy is the fact that even after receiving both treatments, expectations are still quite heterogeneous and many still substantially depart from the target. So people seem to interpret the information in different ways and view it as imperfectly credible.
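The Bayesian-updating logic can be sketched with the textbook normal-normal case. This is an illustration of the mechanism rather than the estimation in our paper: the information treatment is modeled as a noisy signal centered on the 2% target, with the noise variance standing in for imperfect credibility, and all numbers are hypothetical.

```python
def bayesian_update(prior_mean, prior_var, signal, signal_var):
    """Posterior mean and variance for a normal prior and a normal signal."""
    # Weight on the signal is larger when the prior is more uncertain.
    weight = prior_var / (prior_var + signal_var)
    posterior_mean = prior_mean + weight * (signal - prior_mean)
    posterior_var = (1 - weight) * prior_var
    return posterior_mean, posterior_var


# Two hypothetical respondents who both start at a 5% forecast and see the
# same signal (the 2% target), but differ in prior uncertainty.
less_uncertain = bayesian_update(prior_mean=5.0, prior_var=1.0,
                                 signal=2.0, signal_var=1.0)
more_uncertain = bayesian_update(prior_mean=5.0, prior_var=3.0,
                                 signal=2.0, signal_var=1.0)

print(less_uncertain, more_uncertain)
```

In this sketch the more uncertain respondent moves further toward the target, and both end up with lower variance than they started with, yet neither lands exactly on 2% as long as the signal is treated as noisy.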

We look at how treatment effects vary by respondent characteristic. One interesting result is that, after receiving both treatments, the discrepancy between mean male and female inflation expectations (which has been noted in many studies) nearly disappears (see figure below).

There is more in the paper about how treatment effects vary with other characteristics, including respondents' opinion of government policy and their prior knowledge. We also look at whether expectations can be "un-anchored from below" with the graph treatment.

Thursday, September 14, 2017

Consumer Forecast Revisions: Is Information Really so Sticky?

My paper "Consumer Forecast Revisions: Is Information Really so Sticky?" was just accepted for publication in Economics Letters. This is a short paper that I believe makes an important point.

Sticky information models are one way of modeling imperfect information. In these models, only a fraction (λ) of agents update their information sets each period. If λ is low, information is quite sticky, and that can have important implications for macroeconomic dynamics. There have been several empirical approaches to estimating λ. With micro-level survey data, a non-parametric and time-varying estimate of λ can be obtained by calculating the fraction of respondents who revise their forecasts (say, for inflation) at each survey date. Estimates from the Michigan Survey of Consumers (MSC) imply that consumers update their information about inflation approximately once every 8 months.

Here are two issues that I point out with these estimates:

I show that several issues with estimates of information stickiness based on consumer survey microdata lead to substantial underestimation of the frequency with which consumers update their expectations. The first issue stems from data frequency. Respondents in the rotating panel of the Michigan Survey of Consumers (MSC) take the survey twice with a six-month gap. A consumer may have the same forecast at months t and t+6 but different forecasts in between. The second issue is that responses are reported to the nearest integer. A consumer may update her information, but if the update results in a sufficiently small revision, it will appear that she has not updated her information.

To quantify how these issues matter, I use data from the New York Fed Survey of Consumer Expectations, which is available monthly and not rounded to the nearest integer. I compute updating frequency with this data. It is very high-- at least 5 revisions in 8 months, as opposed to the 1 revision per 8 months found in previous literature.

Then I transform the data so that it is like the MSC data. First I round the responses to the nearest integer. This makes the updating frequency estimates decrease a little. Then I look at it at the six-month frequency instead of monthly. This makes the updating frequency estimates decrease a lot, and I find similar estimates to the previous literature-- updates about every 8 months.
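The mechanics of these two transformations can be sketched in a few lines. The panel below is made up for illustration (it is not SCE or MSC data), `revision_share` is a hypothetical helper, and the numbers are not meant to reproduce the paper's magnitudes.

```python
def revision_share(panel, gap=1, round_to_integer=False):
    """Share of observed forecast pairs, `gap` months apart, that differ."""
    revised = 0
    total = 0
    for forecasts in panel:
        if round_to_integer:
            forecasts = [round(f) for f in forecasts]
        # Compare each observed forecast with the one `gap` months earlier.
        for t in range(gap, len(forecasts), gap):
            total += 1
            if forecasts[t] != forecasts[t - gap]:
                revised += 1
    return revised / total


# Hypothetical monthly inflation forecasts (months 0..6) for four respondents.
panel = [
    [3.0, 3.6, 2.8, 3.7, 2.9, 3.8, 3.0],  # revises every month, ends where it began
    [2.0, 2.0, 2.3, 2.3, 4.1, 4.1, 2.0],  # one revision is too small to survive rounding
    [5.0, 5.0, 5.0, 5.0, 5.0, 5.0, 5.0],  # never revises
    [1.0, 1.0, 1.0, 2.0, 2.0, 2.0, 2.0],  # revises once and stays there
]

monthly_raw = revision_share(panel)
monthly_rounded = revision_share(panel, round_to_integer=True)
six_month_rounded = revision_share(panel, gap=6, round_to_integer=True)
print(monthly_raw, monthly_rounded, six_month_rounded)
```

In this toy panel, rounding hides one small revision, while observing respondents only at months 0 and 6 hides every revision by the first two respondents, because their forecasts happen to return to their starting values.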

So low-frequency data, and, to a lesser extent, rounded responses, result in large underestimates of revision frequency (or equivalently, overestimates of information stickiness). And if information is not really so sticky, then sticky information models may not be as good at explaining aggregate dynamics. Other classes of imperfect information models, or sticky information models combined with other classes of models, might be better.

Read the ungated version here. I will post a link to the official version when it is published.

Monday, August 21, 2017

New Argument for a Higher Inflation Target

On voxeu.org, Philippe Aghion, Antonin Bergeaud, Timo Boppart, Peter Klenow, and Huiyu Li discuss their recent work on the measurement of output and whether measurement bias can account for the measured slowdown in productivity growth. While the work is mostly relevant to discussions of the productivity slowdown and secular stagnation, I was interested in a corollary that ties it to discussions of the optimal level of the inflation target.

The authors note the high frequency of "creative destruction" in the US, which they define as when "products exit the market because they are eclipsed by a better product sold by a new producer." This presents a challenge for statistical offices trying to measure inflation:

The standard procedure in such cases is to assume that the quality-adjusted inflation rate is the same as for other items in the same category that the statistical office can follow over time, i.e. products that are not subject to creative destruction. However, creative destruction implies that the new products enter the market because they have a lower quality-adjusted price. Our study tries to quantify the bias that arises from relying on imputation to measure US productivity growth in cases of creative destruction.

They explain that this can lead to mismeasurement of TFP growth, which they quantify by examining changes in the share of incumbent products over time:

If the statistical office is correct to assume that the quality-adjusted inflation rate is the same for creatively destroyed products as for surviving incumbent products, then the market share of surviving incumbent products should stay constant over time. If instead the market share of these incumbent products shrinks systematically over time, then the surviving subset of products must have higher average inflation than creatively destroyed products. For a given elasticity of substitution between products, the more the market share shrinks for surviving products, the more the missing growth.

From 1983 to 2013, they estimate that "missing growth" averaged about 0.63% per year. This is substantial, but there is no clear time trend (i.e. there is not more missed growth in recent years), so it can't account for the measured productivity growth slowdown.

The authors suggest that the Fed should consider adjusting its inflation target upwards to "get closer to achieving quality-adjusted price stability." A few months ago, 22 economists including Joseph Stiglitz and Narayana Kocherlakota wrote a letter urging the Fed to consider raising its inflation target, in which they stated:

Policymakers must be willing to rigorously assess the costs and benefits of previously-accepted policy parameters in response to economic changes. One of these key parameters that should be rigorously reassessed is the very low inflation targets that have guided monetary policy in recent decades. We believe that the Fed should appoint a diverse and representative blue ribbon commission with expertise, integrity, and transparency to evaluate and expeditiously recommend a path forward on these questions.

The letter did not mention this measurement bias rationale for a higher target, but the blue ribbon commission they propose should take it into consideration.

Friday, August 18, 2017

The Low Misery Dilemma

The other day, Tim Duy tweeted:

"I expect the FOMC minutes to reveal that some participants were concerned about low inflation while others focused on low unemployment." -- Tim Duy (@TimDuy), August 16, 2017

It took me a moment--and I'd guess I'm not alone--to even recognize how remarkable this is. The New York Times ran an article with the headline "Fed Officials Confront New Reality: Low Inflation and Low Unemployment." Confront, not embrace, not celebrate.

The misery index is the sum of the unemployment rate and the inflation rate. Arthur Okun proposed it in the 1960s as a crude gauge of the economy, based on the fact that high inflation and high unemployment are both miserable (so high values of the index are bad). The misery index was pretty low in the 60s, in the 6% to 8% range, similar to where it has been since around 2014. Now it is around 6%. Great, right?

The NYT article notes that we are in an opposite situation to the stagflation of the 1970s and early 80s, when both high inflation and high unemployment were concerns. The misery index reached a high of 21% in 1980. (The unemployment data is only available since 1948.)

Very high inflation and high unemployment are each individually troubling for the social welfare costs they impose (which are more obvious for unemployment). But observed together, they also troubled economists for seeming to run contrary to the Phillips curve-based models of the time. The tradeoff between inflation and unemployment wasn't what economists and policymakers had believed, and their misunderstanding probably contributed to the misery.

Though economic theory has evolved, the basic Phillips curve tradeoff idea is still an important part of central bankers' models. By models, I mean both the formal quantitative models used by their staffs and the way they think about how the world works. General idea: if the economy is above full employment, that should put upward pressure on wages, which should put upward pressure on prices.

So low unemployment combined with low inflation seems like a nice problem to have, but if the combination is indeed a new reality--that is, something that will last--then there is something amiss in that chain of logic. Maybe we are not at full employment, because the natural rate of unemployment is a lot lower than we thought, or we are looking at the wrong labor market indicators. Maybe full employment does not put upward pressure on wages, for some reason, or maybe we are looking at the wrong wage measures. For example, San Francisco Fed researchers argue that wage growth measures should be adjusted in light of retiring Baby Boomers. Or maybe the link between wage and price inflation has weakened.

Until policymakers feel confident that they understand why we are experiencing both low inflation and low unemployment, they can't simply embrace the low misery. It is natural that they will worry that they are missing something, and that the consequences of whatever that is could be disastrous. The question is what to do in the meanwhile.

There are two camps for Fed policy. One camp favors a wait-and-see approach: hold rates steady until we actually observe inflation rising above 2%. Maybe even let it stay above 2% for a while, to make up for the lengthy period of below-2% inflation. The other camp favors raising rates preemptively, just in case we are missing some sign that inflation is about to spiral out of control. This latter possibility strikes me as unlikely, but I'm admittedly oversimplifying the concerns, and also haven't personally experienced high inflation.

Thursday, August 10, 2017

Macro in the Econ Major and at Liberal Arts Colleges

Last week, I attended the 13th annual Conference of Macroeconomists from Liberal Arts Colleges, hosted this year by Davidson College. I also attended the conference two years ago at Union College. I can't recommend this conference strongly enough!

The conference is a response to the increasing expectation of high quality research at many liberal arts colleges. Many of us are the only macroeconomist at our college, and can't regularly attend macro seminars, so the conference is a much-needed opportunity to receive feedback on work in progress. (The paper I presented last time just came out in the Journal of Monetary Economics!)

This time, I presented "Inflation Expectations and the Price at the Pump" and discussed Erin Wolcott's paper, "Impact of Foreign Official Purchases of U.S. Treasuries on the Yield Curve."

There was a wide range of interesting work. For example, Gina Pieters presented “Bitcoin Reveals Unofficial Exchange Rates and Detects Capital Controls.” M. Saif Mehkari's work on “Repatriation Taxes” is highly relevant to today's policy discussions. Most of the presenters and attendees were junior faculty members, but three more senior scholars held a panel discussion at dinner. Next year, the conference will be held at Wake Forest.

I also attended a session on "Macro in the Econ Major" led by PJ Glandon. A link to his slides is here. One slide presented the image below, prompting an interesting discussion about whether and how we should tailor what is taught in macro courses to our perception of the students' interests and career goals.

Monday, August 7, 2017

Labor Market Conditions Index Discontinued

A few years ago, I blogged about the Fed's new Labor Market Conditions Index (LMCI). The index attempts to summarize the state of the labor market using a statistical technique that captures the primary common variation from 19 labor market indicators. I was skeptical about the usefulness of the LMCI for a few reasons. And as it turns out, the LMCI is now discontinued as of August 3.

The discontinuation is newsworthy because the LMCI was cited in policy discussions at the Fed, even by Janet Yellen. The index became high-profile enough that I was even interviewed about it on NPR's Marketplace.

One issue that I noted with the index in my blog was the following:

A minor quibble with the index is its inclusion of wages in the list of indicators. This introduces endogeneity that makes it unsuitable for use in Phillips Curve-type estimations of the relationship between labor market conditions and wages or inflation. In other words, we can't attempt to estimate how wages depend on labor market tightness if our measure of labor market tightness already depends on wages by construction.

This corresponds to one reason that is provided for the discontinuation of the index: "including average hourly earnings as an indicator did not provide a meaningful link between labor market conditions and wage growth."

The other reasons provided for discontinuation are that "model estimates turned out to be more sensitive to the detrending procedure than we had expected" and "the measurement of some indicators in recent years has changed in ways that significantly degraded their signal content."

I also noted in my blog post and on NPR that the index is almost perfectly correlated with the unemployment rate, meaning it provides very little additional information about labor market conditions. (Or interpreted differently, meaning that the unemployment rate provides a lot of information about labor market conditions.) The development of the LMCI was part of a worthy effort to develop alternative informative measures of labor market conditions that can help policymakers gauge where we are relative to full employment and predict what is likely to happen to prices and wages. Since resources and attention are limited, I think it is wise to redirect them toward developing and evaluating other measures.

Thursday, July 27, 2017

The Obesity Code and Economists as General Practitioners

"The past generation, like several generations before it, has indeed been one of greater and greater specialization…This advance has not been attained without cost. The price has been the loss of minds, or the neglect to develop minds, trained to cope with the complex problems of today in the comprehensive, overall manner called for by such problems."

The above quote may sound like a recent criticism of economics, but it actually comes from a 1936 article, "The Need for `Generalists,'" by A. G. Black, Chief of the Bureau of Agricultural Economics, in the Journal of Farm Economics (p. 657). Just 12 years prior, John Maynard Keynes penned his much-quoted description of economists for a 1924 obituary of Alfred Marshall:

“The study of economics does not seem to require any specialized gifts of an unusually high order. Is it not, intellectually regarded, a very easy subject compared with the higher branches of philosophy or pure science? An easy subject at which few excel! The paradox finds its explanation, perhaps, in that the master-economist must possess a rare combination of gifts. He must be mathematician, historian, statesman, philosopher—in some degree. He must understand symbols and speak in words. He must contemplate the particular in terms of the general and touch abstract and concrete in the same flight of thought. He must study the present in the light of the past for the purposes of the future. No part of man’s nature or his institutions must lie entirely outside his regard” (p. 321-322).

While Keynes celebrated economist-as-generalist, Black's complaint about the trend of overspecialization, coupled with excessive "mathiness" and insularity, continues. This often-fair criticism frequently comes from within the profession--because who loves thinking about economists more than economists? But at the same time, there is a trend in the opposite direction, a trend towards taking an increasing scope of nature and institutions into regard. Nowhere is this more obvious than among the blogging, tweeting, punditing economists with whom I associate.

The other day, for example, Miles Kimball wrote a bunch of tweets about the causes of obesity. When I liked one of his tweets (because it suggested cheese is not bad for you, and how could I not like that?) he asked if I would read "The Obesity Code" by Jason Fung, which he blogged about earlier, and respond to the evidence.

I was quick to agree. Only later did I pause to consider the irony that I feel more confident in my ability to evaluate scholarship from a medical field in which I have zero training than I often feel when asked to review scholarship or policies in my field of supposed expertise, monetary economics. Am I being a master-economist à la Keynes, or simply irresponsible?

Kenneth Arrow's 1963 "Uncertainty and the Welfare Economics of Medical Care" is credited with the birth of health economics. In this article, Arrow notes that he is concerned only with the market and non-market institutions of medical services, and not health itself. But since then, health economics has broadened in scope, and incorporates the study of actual health outcomes (like obesity), to both acclaim and criticism. See my earlier post about economics research on depression, or consider the extremely polarized ratings of economist Emily Oster's book on pregnancy (which I like very much).

Jason Fung himself is not an obesity researcher, but rather a physician who specializes in end-stage kidney disease requiring dialysis. The foreword to his book remarks, "His credentials do not obviously explain why he should author a book titled The Obesity Code," before going on to justify his decision. So, while duly aware of my limited credentials, I feel willing to at least comment on the book and point out many of its parallels with macroeconomic research.

The Trouble with Accounting Identities (and Counting Calories)

Fung's book takes issue with the dominant "Calories In/Calories Out" paradigm for weight loss. This idea-- that to lose weight, you need to consume fewer calories than you burn-- is based on the First Law of Thermodynamics: energy can neither be created nor destroyed in an isolated system. Fung obviously doesn't dispute the Law, but he disputes its application to weight loss, premised on "Assumption 1: Calories In and Calories Out are independent of each other."

Fung argues that in response to a reduction in Calories In, the body will reduce Calories Out, citing a number of studies in which underfed participants started burning far fewer calories per day, becoming cold and unable to concentrate as the body expended fewer resources on heating itself and on brain functioning.

In economics, an accounting identity is an equality that by definition or construction must be true. Every introductory macro course teaches national income accounting: GDP=C+I+G+NX. What happens, we may ask, if government spending (G) increases by $1000? Most students would probably guess that GDP would also increase by $1000, but this is relying on a ceteris paribus assumption. If consumption (C), investment (I), and net exports (NX) stay the same when G rises, then yes, GDP must rise by $1000. But if C, I, or NX is not independent of G, the response of GDP could very well be quite different. For example, in the extreme case that government spending completely crowds out private investment, so I falls by $1000 (with no change in C or NX), then GDP will not change at all.
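The arithmetic of the identity can be written out as a small sketch. The `crowd_out` parameter is a hypothetical device for illustration (the fraction of the increase in G that is offset by a fall in I), and C and NX are assumed to stay fixed.

```python
def gdp_change(delta_g, crowd_out=0.0):
    """Change in GDP = dC + dI + dG + dNX, assuming dC = dNX = 0.

    crowd_out is the (hypothetical) fraction of the rise in G
    that is offset by a fall in private investment I.
    """
    delta_i = -crowd_out * delta_g
    return delta_g + delta_i


no_crowding = gdp_change(1000)                    # ceteris paribus case
partial_crowding = gdp_change(1000, crowd_out=0.5)
full_crowding = gdp_change(1000, crowd_out=1.0)   # I falls by the full $1000
print(no_crowding, partial_crowding, full_crowding)
```

The identity holds in every case; what differs is the behavioral assumption about how the other components respond to G.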

The First Law of Thermodynamics is also an accounting identity. It is true that if Calories In exceed Calories Out, we will gain weight, and vice versa. But it is not true that reducing Calories In leaves Calories Out unchanged. And according to Fung, Calories Out may respond so strongly to Calories In, almost one-for-one, that sustained weight loss will not occur.

Proximate and Ultimate Causes

A caloric deficit (Calories In < Calories Out) is a proximate cause of weight loss, and a caloric surplus a proximate cause of weight gain. But proximate causes are not useful for policy prescriptions. Think again about the GDP=C+I+G+NX accounting identity. This tells us that we can increase GDP by increasing consumption. Great! But how can we increase consumption? We need to know the deeper determinants of the components of GDP. Telling a patient to increase her caloric deficit to lose weight is as practical as advising a government to boost consumption to achieve higher GDP, and neither effort is likely to be very sustainable. So most of Fung's book is devoted to exploring what he claims are the ultimate causes of body weight and the policy implications that follow.

Set Points

One of the most important concepts in Fung's book is the "set point" for body weight. This is the weight at which the body "wants" to be; efforts to sustain weight loss are unlikely to succeed, as the body returns to its set weight by reducing basal energy expenditure.

An important question is what determines the set point. A second set of questions surrounds what happens away from the set point. In other words, what are the mechanisms by which homeostasis occurs? In macro models, too, we may focus on finding the equilibrium and on the off-equilibrium dynamics. The very idea that the body has a set point is controversial, or at least counterintuitive, as is the existence of certain set points in macro, especially the natural rate of unemployment.

The set point, according to Fung, is all about insulin. Reducing insulin levels and insulin resistance allows fat burning (lipolysis) so the body has plenty of energy coming in without the need to lower basal metabolism; this is the only way to reduce the set point. The whole premise of his hormonal obesity theory rests on this. It is the starting point for his explanation of why obesity occurs and what to do about it. Obesity occurs when the body's set point gradually increases over time, as insulin levels and insulin resistance rise in a "vicious cycle." So we need to understand the mechanisms behind the rise and the dynamics of this cycle.

Mechanisms and Models

Fung goes into great detail about the workings of insulin and related hormones and their interactions with glucose and fructose. This background all aims to support his proposals about the causes of obesity. The causes are multifactorial, but mostly have to do with the composition and timing of the modern diet (what and when we eat). Culprits include refined and processed foods, added sugar, and emphasis on low fat/high carb diets, high cortisol levels from stress and sleep deprivation, and frequent snacking. Fung cites dozens of empirical studies, some observational and others controlled trials, to support his hormonal obesity theory.

Here I am not entirely sure how closely to draw a parallel to economics. Macroeconomists also rely on models that lay out a series of mechanisms, and use (mostly) observational and (rarely) experimental data to test them, and like epidemiological researchers face challenges of endogeneity and omitted variable bias. But are biological mechanisms inherently different than economic ones because they are more observable, stable, and falsifiable? My intuition says yes, but I don't know enough about medical and biological research to be sure. Fung does not discuss the research behind scientists' knowledge of how hormones work, but only the research on health and weight outcomes associated with various nutritional strategies and drugs.

At the beginning of the book, Fung announces his refusal to even consider animal studies. This somewhat surprises me, as I thought that finding a result consistently across species could strengthen our conclusions, and mechanisms are likely to be similar, but he seems to view animal studies as totally uninformative for humans. If that is true, then why do we use animals in medical research at all?

Persistence Creates Resistance

So how does the body's set point rise enough that a person becomes obese? Fung claims that the Calories In/Calories Out model neglects the time-dependence of obesity, noting that it is much easier for a person who has been overweight for only a short while to lose weight. If someone has been overweight a long time, it is much harder, because they have developed insulin resistance. Insulin levels normally rise and fall over the course of the day, not normally causing any problem. But persistently high levels of insulin, a hormonal imbalance, result in insulin resistance, leading to yet higher levels of insulin, and yet greater insulin resistance (and weight gain). Fung uses the cycle of antibiotic resistance as an analogy for the development of insulin resistance:

Exposure causes resistance...As we use an antibiotic more and more, organisms resistant to it are naturally selected to survive and reproduce. Eventually, these resistant organisms dominate, and the antibiotic becomes useless (p. 110).

He also uses the example of drug resistance: a cocaine user needs ever greater doses. "Drugs cause drug resistance" (p. 111). Macroeconomics provides its own metaphors. In the early conception of the Phillips Curve, it was believed that the inverse relationship between unemployment and inflation could be exploited by policymakers. Just as a doctor who wants to cure a bacterial infection may prescribe antibiotics, a policymaker who wants lower unemployment must just tolerate a little higher inflation. But the trouble with following such a policy is that as that higher inflation persists, people's expectations adapt. They come to expect higher inflation in the future, and that expectation is self-fulfilling, so it takes yet higher inflation to keep unemployment at or below its "set point."
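The adaptive-expectations logic can be sketched with the textbook expectations-augmented Phillips curve, in which inflation equals expected inflation plus a term that rises as unemployment falls below its natural rate, and expected inflation equals last period's inflation. The functional form, the slope, and the rates used here are standard illustrative assumptions, not estimates.

```python
def inflation_path(initial_inflation, natural_rate, target_unemployment,
                   slope, periods):
    """Inflation when policymakers hold unemployment at a fixed target.

    Assumes inflation = expected inflation + slope * (natural rate - u),
    with adaptive expectations: expected inflation = last period's inflation.
    """
    path = [initial_inflation]
    for _ in range(periods):
        expected = path[-1]  # expectations adapt to what was just observed
        path.append(expected + slope * (natural_rate - target_unemployment))
    return path


# Hypothetical numbers: hold unemployment one point below a 5% natural rate.
path = inflation_path(2.0, 5.0, 4.0, 0.5, 4)
print(path)
```

In this sketch, inflation has to rise every period just to keep unemployment at the same below-natural level, which is the "resistance" in the analogy.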

Institutional Interest and Influence

How a field evaluates evidence and puts it into practice--and even what research is funded and publicized--depends on the powerful institutions in the field and their vested interests, even if the interest is merely in saving face. According to Fung, the American Heart Association (AHA), snack food companies, and doctors repeatedly ignored evidence against the low-fat, high-carb diet and the Calories In/Calories Out model to make money or save face. His criticisms of the AHA, in particular, are reminiscent of those against the IMF for the policies it has imposed through the conditions of its loans.

Of course, the critics themselves may have biases or vested interests. Fung himself quite likely neglected to mention a number of studies that did not fit his theory. In an effort to sell books and promote his website and reputation, he very likely is oversimplifying and projecting more-than-warranted confidence. So how do I evaluate the book overall, and will I follow its recommendations for myself and my family?

First, while the book's title emphasizes obesity, it doesn't seem to be written only for readers who are or are becoming obese. It is not clear whether the recommendations presented in this book are useful for people who are already maintaining a healthy weight, but he certainly never suggests otherwise. And for a book so focused on hormones, he makes shockingly little distinction between male and female dietary needs and responses. Since I am breastfeeding twins, and am a still-active former college athlete, my hormonal balance and dietary needs must be far from average, and I'm not looking to lose weight. He also doesn't make much distinction between the needs of adults and kids (like my toddler).

Still, despite the fact that he presents himself as destroying the conventional wisdom on weight loss, most of his advice is unlikely to be controversial: eat whole foods, reduce added sugar, don't fear healthy fats. Before reading this book, I already knew I should try to do that, though sometimes chose not to. I was especially focused on nutrition during my twin pregnancy, and most of the advice was basically equivalent. After reading this book, I'm slightly more motivated, as I have somewhat more evidence as to why it is beneficial, and I still don't see how it could hurt. Other advice is less supported, but at least not likely to be harmful: avoid artificial sweeteners (even Stevia), eat vinegar.

His support of fasting and snack avoidance, and his views on insulin provision to diabetics, seem the least supported and most likely to be harmful. He says that fasting and skipping snacks and breakfast provide recurrent periods of very low insulin levels, reducing insulin resistance, but I don't see any concrete evidence on how long you must wait between meals to reap the benefits. He cites ancient Greeks, like Hippocrates of Kos, and a few case studies, as "evidence" of the benefits of fasting. Maybe it is my own proclivity for "grazing," and my observations of my two-year-old when we skip a snack, that make me skeptical. This may work for some, but I'm in no rush to try it.

Friday, July 7, 2017

New Publication: Measuring Uncertainty Based on Rounding

For the next few weeks, you can download my new paper in the Journal of Monetary Economics for free here. The title is "Measuring uncertainty based on rounding: New method and application to inflation expectations." It became available online the same day that my twins were born (!!) but was much longer in the making, as it was my job market paper at Berkeley.

Here is the abstract:

The literature on cognition and communication documents that people use round numbers to convey uncertainty. This paper introduces a method of quantifying the uncertainty associated with round responses in pre-existing survey data. I construct micro-level and time series measures of inflation uncertainty since 1978. Inflation uncertainty is countercyclical and correlated with inflation disagreement, volatility, and the Economic Policy Uncertainty index. Inflation uncertainty is lowest among high-income consumers, college graduates, males, and stock market investors. More uncertain consumers are more reluctant to spend on durables, cars, and homes. Round responses are common on many surveys, suggesting numerous applications of this method.

Wednesday, May 31, 2017

Low Inflation at "Essentially Full Employment"

Yesterday, Brad DeLong took issue with Charles Evans' recent claim that "Today, we have essentially returned to full employment in the U.S." Evans, President of the Federal Reserve Bank of Chicago and a member of the FOMC, was speaking before the Bank of Japan Institute for Monetary and Economic Studies in Tokyo on "lessons learned and challenges ahead" in monetary policy. DeLong points out that the age 25-54 employment-to-population ratio in the United States of 78.5% is low by historical standards and given social and demographic trends.

Evans' claim that the U.S. has returned to full employment is followed by his comment that "Unfortunately, low inflation has been more stubborn, being slower to return to our objective. From 2009 to the present, core PCE inflation, which strips out the volatile food and energy components, has underrun 2% and often by substantial amounts." DeLong asks,

And why the puzzlement at the failure of core inflation to rise to 2%? That is a puzzle only if you assume that you know with certainty that the unemployment rate is the right variable to put on the right hand side of the Phillips Curve. If you say that the right variable is equal to some combination with weight λ on prime-age employment-to-population and weight 1-λ on the unemployment rate, then there is no puzzle--there is simply information about what the current value of λ is.

It is not totally obvious why prime-age employment-to-population should drive inflation distinctly from unemployment--that is, why DeLong's λ should not be zero, as in the standard Phillips Curve. Note that the employment-to-population ratio grows with the labor force participation rate (LFPR) and declines with the unemployment rate. Typically, labor force participation is mostly acyclical: its longer run trends dwarf any movements at the business cycle frequency (see graph below). So in a normal recession, the decline in the employment-to-population ratio is mostly attributable to the rise in the unemployment rate, not the fall in LFPR (so it shouldn't really matter if you simply impose λ=0).

Graph: labor force participation rate (source: https://fred.stlouisfed.org/series/LNS11300060)

As Christopher Erceg and Andrew Levin explain, a recession of moderate size and severity does not prompt many departures from the labor market, but long recessions can produce quite pronounced declines in labor force participation. In their model, this gradual response of labor force participation to the unemployment rate arises from high adjustment costs of moving in and out of the formal labor market. But the Great Recession was protracted enough to lead people to leave the labor force despite the adjustment costs. According to their analysis:

cyclical factors can fully account for the post-2007 decline of 1.5 percentage points in the LFPR for prime-age adults (i.e., 25–54 years old). We define the labor force participation gap as the deviation of the LFPR from its potential path implied by demographic and structural considerations, and we find that as of mid-2013 this gap stood at around 2%. Indeed, our analysis suggests that the labor force gap and the unemployment gap each accounts for roughly half of the current employment gap, that is, the shortfall of the employment-to-population rate from its precrisis trend.

Erceg and Levin discuss their results in the context of the Phillips Curve, noting that "a large negative participation gap induces labor force participants to reduce their wage demands, although our calibration implies that the participation gap has less influence than the unemployment rate quantitatively." This means that both unemployment and labor force participation enter the right hand side of the Phillips Curve (and DeLong's λ is nonzero), so if a deep recession leaves the LFPR (and, accordingly, the employment-to-population ratio) low even as unemployment returns to its natural rate, inflation will still remain low.
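The accounting behind this argument can be sketched in a few lines of Python. The employment-to-population ratio equals the LFPR times one minus the unemployment rate, and the slack measure below puts weight λ on the employment-to-population shortfall and 1-λ on the unemployment gap. The function names, the linear form of the slack measure, and all of the numbers are my own illustrative assumptions, not values from DeLong or from Erceg and Levin.

```python
def employment_to_population(lfpr, unemployment_rate):
    """Employment/population = (labor force/population) * (employed/labor force)."""
    return lfpr * (1.0 - unemployment_rate)


def composite_slack(lam, epop, epop_trend, u, u_natural):
    """Hypothetical slack measure with weight lam on the employment-to-population
    shortfall and weight (1 - lam) on the unemployment gap."""
    epop_gap = epop_trend - epop   # positive when employment/population is below trend
    u_gap = u - u_natural          # positive when unemployment is above its natural rate
    return lam * epop_gap + (1.0 - lam) * u_gap


# Illustrative numbers only: unemployment is back at its natural rate,
# but participation is still 1.5 points below its trend.
u = u_natural = 0.045
epop_trend = employment_to_population(0.830, u_natural)
epop_now = employment_to_population(0.815, u)

for lam in (0.0, 0.5):
    slack = composite_slack(lam, epop_now, epop_trend, u, u_natural)
    print(f"lambda = {lam}: slack = {slack:.4f}")
```

With λ=0 the measure reports no slack at all, so low inflation looks like a puzzle. With any positive λ, the lingering participation shortfall shows up as slack, and the puzzle goes away.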

Erceg and Levin also discuss implications for monetary policy design, considering the consequences of responding to the cyclical component of the LFPR in addition to the unemployment rate.

We use our model to analyze the implications of alternative monetary policy strategies against the backdrop of a deep recession that leaves the LFPR well below its longer run potential level. Specifically, we compare a noninertial Taylor rule, which responds to inflation and the unemployment gap to an augmented rule that also responds to the participation gap. In the simulations, the zero lower bound precludes the central bank from lowering policy rates enough to offset the aggregate demand shock for some time, producing a deep recession; once the shock dies away sufficiently, policy responds according to the Taylor rule. A key result of our analysis is that monetary policy can induce a more rapid closure of the participation gap through allowing the unemployment rate to fall below its longrun natural rate. Quite intuitively, keeping unemployment persistently low draws cyclical nonparticipants back into labor force more quickly. Given that the cyclical nonparticipants exert some downward pressure on inflation, some undershooting of the long-run natural rate actually turns out to be consistent with keeping inflation stable in our model.

While the authors don't explicitly use the phrase "full employment," their paper does provide a rationale for the low core inflation we're experiencing despite low unemployment. Erceg and Levin's paper was published in the Journal of Money, Credit, and Banking in 2014; ungated working paper versions from 2013 are available here.
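To see how the two rules in that comparison differ, here is a minimal Python sketch of a noninertial Taylor-type rule with an optional response to the participation gap and a zero lower bound. The coefficients, the neutral rate, and the sign conventions are hypothetical choices of mine for illustration; they are not Erceg and Levin's calibration.

```python
def policy_rate(inflation, u_gap, participation_gap=0.0,
                r_star=2.0, pi_target=2.0, a_pi=0.5, a_u=1.0, a_p=0.0):
    """Policy rate in percent under a noninertial Taylor-type rule.

    Conventions assumed here (all hypothetical):
      u_gap             = unemployment rate minus natural rate (positive = slack)
      participation_gap = potential LFPR minus actual LFPR     (positive = slack)
      a_p = 0 gives the standard rule; a_p > 0 gives the augmented rule.
    """
    shadow_rate = (r_star + inflation
                   + a_pi * (inflation - pi_target)
                   - a_u * u_gap
                   - a_p * participation_gap)
    # The zero lower bound stops the rate from following the rule below zero.
    return max(0.0, shadow_rate)


# Unemployment is at its natural rate, inflation is 1.5%,
# and participation is 2 points below potential.
print(policy_rate(1.5, u_gap=0.0, participation_gap=2.0))           # 3.25
print(policy_rate(1.5, u_gap=0.0, participation_gap=2.0, a_p=0.5))  # 2.25
```

In this toy example the augmented rule keeps the policy rate a full point lower while the participation gap persists, which is the direction of the result described in the quote above.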

Tuesday, May 2, 2017

Do Socially Responsible Investors Have It All Wrong?

Fossil fuels divestment is a widely debated topic at many college campuses, including my own. The push, often led by students, to divest from fossil fuels companies is an example of the socially responsible investing (SRI) movement. SRI strategies seek to promote goals like environmental stewardship, diversity, and human rights through portfolio management, including the screening of companies involved with objectionable products or behaviors.

It seems intuitive that the endowment of a foundation or educational institution should not invest in a firm whose activities oppose the foundation's mission. Why would a charity that fights lung cancer invest in tobacco, for example? But in a recent Federal Reserve Board working paper, "Divest, Disregard, or Double Down?", Brigitte Roth Tran suggests that intuition may be exactly backwards. She explains that "if firm returns increase with activities the endowment combats, doubling down on the investment increases expected utility by aligning funding availability with need. I call this 'mission hedging.'"

Returning to the example of the lung-cancer-fighting charity, suppose that the charity is heavily invested in tobacco. If the tobacco industry does unexpectedly well, then the charity will get large returns on its investments precisely when its funding needs are greatest (because presumably tobacco use and lung cancer rates will be up).
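The tobacco example can be turned into a toy calculation. The Python sketch below is not Roth Tran's model: it is a two-state illustration with made-up returns, made-up "need" weights, and log utility, all of which are my own assumptions. It only shows how weighting utility by funding need can flip the ranking of portfolios.

```python
import math

# Hypothetical two-state world; each state is equally likely.
# "need" scales how valuable a dollar is to the charity in that state.
STATES = [
    {"prob": 0.5, "tobacco_return": 0.30, "need": 2.0},   # tobacco booms, more lung cancer
    {"prob": 0.5, "tobacco_return": -0.10, "need": 1.0},  # tobacco busts, less lung cancer
]
SAFE_RETURN = 0.10  # alternative asset with the same expected return as tobacco


def expected_utility(tobacco_share, mission_weighted=True):
    """Expected log utility of end-of-period funds per dollar invested."""
    total = 0.0
    for state in STATES:
        gross = (1.0
                 + tobacco_share * state["tobacco_return"]
                 + (1.0 - tobacco_share) * SAFE_RETURN)
        weight = state["need"] if mission_weighted else 1.0
        total += state["prob"] * weight * math.log(gross)
    return total


for weighted in (False, True):
    divested = expected_utility(0.0, mission_weighted=weighted)
    hedged = expected_utility(1.0, mission_weighted=weighted)
    print(f"mission_weighted={weighted}: divested={divested:.3f}, hedged={hedged:.3f}")
```

An investor who ignores the mission prefers the safe asset, since tobacco adds risk without adding expected return. Once funds are valued more highly in the state where tobacco booms, holding tobacco comes out ahead in this example.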

Roth Tran uses the Capital Asset Pricing Model to show that this mission hedging strategy "increases expected utility when endowment managers boost portfolio weights on firms whose returns correlate with activities the foundation seeks to reduce." More specifically,

"foundations that do not account for covariance between idiosyncratic risk and marginal utility of assets will generally under-invest in high covariance assets. Because objectionable firms are more likely to have such covariance, firewall foundations will underinvest in these firms by disregarding the mission in the investment process. SRI foundations will tend to underinvest in these firms even more by avoiding them altogether."

Roth Tran acknowledges that there are a number of reasons that mission hedging is not the norm. First, the foundation may experience direct negative utility from investing in a firm it considers reprehensible--or experience a "warm glow" from divesting from such a firm. Second, the foundation may worry that investing in an objectionable firm will hurt its fundraising efforts or reputation (if donors do not understand the benefits of mission hedging). Third, the foundation may believe that divestment will directly lower the levels of the objectionable activity, though this effect is likely to be very small. Roth Tran points out that student leaders of the Harvard fossil fuel divestment campaign acknowledged that the financial impact on fossil fuel companies would be negligible.

Monday, April 17, 2017

EconTalk on the Economics of Pope Francis

Russ Roberts recently interviewed Robert Whaples on the EconTalk podcast, which I have listened to regularly for years. I was especially interested when I saw the title of this episode, The Economics of Pope Francis, both because I am a Catholic and because I generally find Roberts' discussions of religion (from his Jewish perspective) interesting and so articulate that they help me clarify my own thinking, even if my views diverge from his.

In this episode, Roberts and Whaples, an economics professor at Wake Forest and convert to Catholicism, discuss the Pope's 2015 encyclical Laudato Si, which focuses on environmental issues and issues of markets, capitalism, and inequality more broadly. Given Roberts' strong support of free markets in most circumstances, I was pleased and impressed that he did not simply dismiss the Pope's work as anti-market, as many have. Near the end of the episode, Roberts says:

when I think about people who are hostile to capitalism, per se, I would argue that capitalism is not the problem. It's us. Capitalism is, what it's really good at, is giving us what we want--more or less...And so, if you want to change capitalism, you've got to change us. And that's--I really see that--I like the Pope doing that. I'm all for that.

I agree with Roberts' point that one place where religious leaders have an important role to play in the economy is in guiding the religious toward changing, or at least managing, their desires. Whaples discusses this too, summarizing the encyclical as being mainly about people's excessive focus on consumption:

It's mainly on the--the point we were talking about before, consuming too much. It's exhortation. He is basically saying what has been said by the Church for the last 2000 years...Look, you don't need all this stuff. It's pulling you away from the ultimate ends of your life. You are just pursuing it and not what you are meant, what you were created by God to pursue. You were created by God to pursue God, not to pursue this Mammon stuff.

Roberts and Whaples agree that a lot of problems that are typically blamed on market capitalism could be improved if people's desires changed, and that religion can play a role in this (though they acknowledge that some non-religious people also turn away from materialism for various reasons). Roberts' main criticism of the encyclical, however, is that "The problem is the document has got too much other stuff there...it comes across as an institutional indictment, and much less an indictment of human frailty."